So, you’ve seen the appeal of microservices and have decided to take the leap. Great, now what? What tools and expertise do you need to get started? What processes do you need to put in place? These are the questions today’s article seeks to answer. This is not a technical deep dive, nor does it cover every use case (public cloud deployments and serverless technologies, for example, are not covered), but it provides a high-level guide to the elements most crucial to the success of an in-house microservices venture. Starting with…

Culture

Culture is a critical aspect of making the transition to microservices work. Specifically, development culture, operations culture, and the bridging concept between the two: DevOps. Changes to operational and development culture mostly come down to smashing the wall that usually separates these two disciplines. You are probably familiar with the sometimes-hostile relationship that exists between the staff charged with producing new and interesting functionality and the staff responsible for maintaining a stable environment. Communication between the two groups can be limited to staccato and not terribly insightful comments, most of which boil down to something like:

- Operations: Your application is broken!

- Development: It works on my machine; your environment is broken!

This type of exchange fosters animosity, inhibits productivity, and does absolutely nothing to advance the innovation needs of the business. It is born of the false impression that the two groups have different objectives and, sometimes, of the disparity between how the two groups are treated. The truth is that both groups exist to serve the needs of the business; the antagonism stems from a failure to pursue two sides of the same objective cooperatively. The DevOps approach addresses this by bringing development and operational concerns into the same process. How? Well, let’s begin with a simple definition: DevOps is a process-driven approach that combines agile development practices with agile operations practices in order to reduce the delivery time of fixes and new features, thereby bringing both development and operations more closely in line with the needs of the business. Instituting it requires some foundational ingredients: open communication, knowledge sharing, and a common sense of success and failure across team boundaries. Much has already been written on how to facilitate these changes (for example, this article on devops.com), so I won’t rehash all of it here, but expect some growing pains, and do not expect it to happen without some pressure from on high.

Once you’ve established a more convivial and productive relationship between your operations and development staff, it’s time to start addressing how they view their roles and how those roles can support one another. Two excellent places to start are the 12 factors of the Twelve-Factor App methodology (for microservice development practices) and the Three Ways described in both “The DevOps Handbook” and “The Phoenix Project: A Novel About IT, DevOps, and Helping Your Business Win”.

The infrastructure

We will also need to put some new tools in place and/or change the way we use some existing ones. Specifically, we’ll need to package our applications in containers, adopt a container scheduling platform to manage our microservices, configure tools for both continuous integration and continuous deployment (CI/CD), and set up monitoring tools to keep tabs on how all of our busy little microservices are doing. Down the road, we may also want a messaging bus, an orchestration engine, API management, and a service mesh, but these are not necessarily needed at the beginning and are beyond the scope of this article. So, let’s get to it, starting with:

Containers

A container packages our application together with all of its direct dependencies and, typically, a minimal operating system userland, while sharing the host’s OS kernel. Docker is the undisputed champion in this arena (at least for the time being). There ARE other container technologies out there, but none approaches the market penetration achieved by Docker. There is a bevy of online resources to help get Docker up and running, such as the excellent collection of online labs available at Katacoda. If these don’t suit your needs, a quick Google search will yield instructions for nearly any language or application platform.

Okay, so the question of our container technology may be settled, but we still need to select a container scheduler: the component that decides when and where our containers run, and how many instances of each to run.
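To make “when, where, and how many” concrete, here is a toy, single-pass version of the desired-state reconciliation loop that schedulers such as Docker Swarm and Kubernetes are built around: we declare how many replicas we want, and the scheduler keeps starting or stopping containers until reality matches. This is a simplified Python sketch under our own assumptions: the `Node` class, the `service.N` task naming, and the “least-busy node wins” placement rule are hypothetical, and real schedulers also weigh resource reservations and placement constraints.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A machine in a hypothetical cluster and the task ids it is running."""
    name: str
    running: list[str] = field(default_factory=list)


def replicas(service: str, nodes: list[Node]) -> list[tuple[Node, str]]:
    """Every (node, task) pair belonging to `service`; task ids look like 'web.3'."""
    return [(n, t) for n in nodes for t in n.running if t.split(".")[0] == service]


def reconcile(service: str, desired: int, nodes: list[Node]) -> list[str]:
    """One pass of a desired-state loop: start or stop replicas until the
    number running matches `desired`, and return the actions taken."""
    actions = []
    current = replicas(service, nodes)
    next_index = 1 + max((int(t.split(".")[1]) for _, t in current), default=0)

    # Too few replicas ("how many"): start each new one now ("when")
    # on whichever node is running the fewest tasks ("where").
    for _ in range(desired - len(current)):
        target = min(nodes, key=lambda n: len(n.running))
        task = f"{service}.{next_index}"
        next_index += 1
        target.running.append(task)
        actions.append(f"start {task} on {target.name}")

    # Too many replicas: stop the extras, taking them from the busiest nodes first.
    for _ in range(len(current) - desired):
        busiest = max((n for n, _ in replicas(service, nodes)), key=lambda n: len(n.running))
        task = next(t for t in reversed(busiest.running) if t.split(".")[0] == service)
        busiest.running.remove(task)
        actions.append(f"stop {task} on {busiest.name}")

    return actions


if __name__ == "__main__":
    cluster = [Node("node-a"), Node("node-b"), Node("node-c")]
    print(reconcile("web", 3, cluster))  # initial deployment
    cluster[1].running.clear()           # simulate losing node-b's containers
    print(reconcile("web", 3, cluster))  # the lost replica is replaced
```

A real scheduler runs this comparison continuously, which is why a crashed container or a lost node is replaced without anyone filing a ticket.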

Container Schedulers

In contrast to containers themselves, the scheduler marketplace has become increasingly crowded, with products ranging from easy to use to highly configurable. Some options involve a degree of vendor lock-in, and the choice can be a little overwhelming at the start. Our clients run the gamut in terms of which ones they’ve adopted, but our recommendation for those just starting out is Docker Swarm. Docker Swarm provides a (nearly) turnkey solution without blocking a later migration to more highly configurable options such as Kubernetes. Open source solutions like Docker Swarm can also help keep the cost and scope of change manageable while still providing the functionality needed to get the microservices ball rolling. But to get our applications out of the development environment and into production in a systematic and repeatable way, we’ll need a…

Continuous Integration/Continuous Deployment (CI/CD) Platform

Chances are, you already have a Continuous Integration (CI) platform of some kind in place. Maybe it’s an open source solution like Jenkins; maybe it’s a commercial product like Atlassian Bamboo. If you do, great! The creators of your particular CI solution or its user community have probably put together a guide on extending your automated build process to incorporate Continuous Deployment (CD), such as this excellent guide on configuring Jenkins for CD. However, we must caution you that CI/CD solutions (like all critical applications) need to be properly configured AND managed. If no one in your organization is explicitly tasked with keeping your CI/CD solution up to date and working properly, you may leave yourself open to security vulnerabilities, build failures, and lost productivity. But fear not: development shops that lack the manpower or the expertise to manage a CI/CD solution themselves can turn to hosted services such as GitLab, CircleCI, or Travis CI to do it for them. These services are often relatively inexpensive and have excellent documentation and support. If you don’t yet have a CI solution in place and prefer to run it in-house, we humbly suggest Jenkins as a starting point: it is well documented, widely used, extensible, and open source. Whichever you choose, any of these CI/CD solutions will help us to automate…

Testing

Microservices promise the ability to shorten our development and deployment lifecycles IF we can adhere to certain principles. One of those is the notion of failing fast. The fail-fast concept applies to various stages of the microservice lifecycle and can be roughly defined as “identifying potential faults as early as possible”. It has implications across a microservice’s entire journey from code project to active production service, but it typically relies on automated testing, beginning with the most common type: unit tests. Unit tests, for those unfamiliar, are a critical part of the development process wherein (ideally) all of our project’s code is tested at the most granular level possible, every time our software is built. These tests are part of the project itself and so travel with the code wherever it goes. This allows automated build systems to measure, track, and report on the viability of our code as it evolves over time. There are even tools that measure unit test code coverage (the percentage of our code that is actually exercised by our tests). Unit tests are critical to ALL development, but they become doubly important if we want to fully leverage the advantages conferred by microservices.
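As a minimal illustration of what such tests look like, here is a sketch using Python’s built-in `unittest` module. The `apply_discount` function and its round-down rule are hypothetical stand-ins for real business logic; the point is that each test checks one small behavior and is cheap enough to run on every build.

```python
import unittest


def apply_discount(price_cents: int, percent: int) -> int:
    """Hypothetical business rule: discount a price, rounding down to whole cents."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price_cents * (100 - percent) // 100


class ApplyDiscountTest(unittest.TestCase):
    def test_typical_discount(self):
        self.assertEqual(apply_discount(1000, 15), 850)

    def test_rounds_down_to_whole_cents(self):
        # 999 * 0.90 = 899.1, which should round down to 899
        self.assertEqual(apply_discount(999, 10), 899)

    def test_rejects_out_of_range_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(1000, 101)
```

Saved alongside the code as, say, `test_pricing.py` (a hypothetical file name), the suite runs with `python -m unittest test_pricing`, which is the kind of command a CI job would execute on every commit.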

However, our need for testing doesn’t stop there. Our microservice workflows are often composed of many infrastructure elements, including other microservices, commercial off-the-shelf (COTS) products, public and/or UI-facing APIs, and others. This necessitates testing not only the code itself, but also the integration points exposed by our microservices and the “runtime” integration processes that tie our workflow components together. This type of integration testing can be performed using consumer-driven contracts. A consumer-driven contract explicitly defines how a piece of functionality is exposed (e.g. as a specific REST endpoint) and precisely what will happen when an external component invokes it. As the name suggests, the contract is written from the consumer’s point of view: each consumer states the requests it will make and the responses it relies on, and the provider is verified against every one of those contracts. This is seen as a less brittle, more precise, and more efficient approach to validating the “connecting tissue” of our workflows than end-to-end testing.

Consider the need to validate one modified component in a workflow consisting of five or more microservices. We could fire up the entire workflow ecosystem, kick off the workflow at its starting point, and then measure the final output when the workflow has completed. But is this efficient? Will we learn quickly whether there is a potential for failure? Will we understand precisely where a problem occurs? What if human interaction is required at some stage of the workflow? We may be able to sidestep some of these questions by reducing the frequency of our (preferably automated) end-to-end tests and making more regular use of consumer-driven contracts. It is not always possible to avoid end-to-end testing entirely, but hopefully we now have some insight into why it is not always the optimal solution.
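The core of a consumer-driven contract fits in a few lines. Dedicated tools such as Pact handle this in practice; the Python below is a hand-rolled illustration of the idea, not Pact’s API. The `billing` consumer, the `orders` provider, the `/orders/42` endpoint, and the `verify_contract` helper are all hypothetical. Note that the provider is checked on its own, without starting any other service in the workflow.

```python
from typing import Any, Callable

# Written by the consumer ("billing"): the one call it makes to the provider
# ("orders") and only the response fields it actually reads, with their types.
ORDER_CONTRACT = {
    "consumer": "billing",
    "provider": "orders",
    "request": {"method": "GET", "path": "/orders/42"},
    "response": {
        "status": 200,
        "body": {"id": int, "total_cents": int, "currency": str},
    },
}

Handler = Callable[[str, str], tuple[int, dict[str, Any]]]


def verify_contract(contract: dict, handler: Handler) -> list[str]:
    """Replay the contract's request against the provider and list every violation."""
    req, expected = contract["request"], contract["response"]
    status, body = handler(req["method"], req["path"])
    problems = []
    if status != expected["status"]:
        problems.append(f"status {status} != {expected['status']}")
    for name, expected_type in expected["body"].items():
        if name not in body:
            problems.append(f"missing field '{name}'")
        elif not isinstance(body[name], expected_type):
            problems.append(f"field '{name}' is {type(body[name]).__name__}, expected {expected_type.__name__}")
    return problems


def orders_handler(method: str, path: str) -> tuple[int, dict[str, Any]]:
    """Stand-in for the provider's request handling, exercised in isolation."""
    if method == "GET" and path.startswith("/orders/"):
        order_id = int(path.rsplit("/", 1)[1])
        return 200, {"id": order_id, "total_cents": 1250, "currency": "EUR", "status": "PAID"}
    return 404, {}


if __name__ == "__main__":
    print(verify_contract(ORDER_CONTRACT, orders_handler) or "contract satisfied")
```

Because the contract lists only the fields the consumer reads, the provider stays free to add fields (like `status` here), while removing or retyping `total_cents` would fail verification immediately and point at exactly which consumer would break.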

Environmental parity

If we really hope to fail fast while testing our microservices, our development, testing, and staging/QA environments must be as representative of our production environment as possible. This idea of environmental parity between the stages of our deployment lifecycle is key to the fail-fast approach, and it is aided by tools that automatically promote our microservices from development all the way into production. The more closely each environment resembles production, the more likely we are to discover problems that might otherwise only appear under production conditions.
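One inexpensive way to keep an eye on parity is to compare each environment’s settings and component versions against production and flag anything that drifts. The Python sketch below assumes those settings are available as simple key/value maps (for example, exported from configuration management); the setting names and the `ALLOWED_TO_DIFFER` list are hypothetical.

```python
# Settings that are *expected* to differ between environments (hypothetical list).
ALLOWED_TO_DIFFER = {"DATABASE_URL", "LOG_LEVEL"}


def parity_drift(env: dict[str, str], production: dict[str, str]) -> dict[str, tuple]:
    """Return {setting: (env_value, production_value)} for every setting that
    differs from production, ignoring the ones allowed to differ.
    A value of None means the setting is missing on that side."""
    drift = {}
    for key in sorted(env.keys() | production.keys()):
        if key in ALLOWED_TO_DIFFER:
            continue
        if env.get(key) != production.get(key):
            drift[key] = (env.get(key), production.get(key))
    return drift


if __name__ == "__main__":
    production = {"DATABASE_URL": "postgres://prod-db/orders", "POSTGRES_VERSION": "15",
                  "MESSAGE_FORMAT": "v2", "LOG_LEVEL": "WARN"}
    staging = {"DATABASE_URL": "postgres://staging-db/orders", "POSTGRES_VERSION": "12",
               "LOG_LEVEL": "DEBUG"}
    print(parity_drift(staging, production))
```

A check like this could run as a gate in the promotion pipeline, so that a staging environment running an older database version is caught before its test results are trusted.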

No matter how well we replicate our production environment in development, no matter how many tests we may have in place, we will still need…

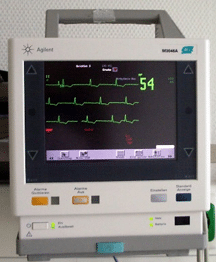

Monitoring

So testing is all well and good, but what about reacting to the issues our tests did not reveal? What about predicting faults before they happen? How can we further exploit our environmental parity to discover what might go wrong BEFORE we hit production? The answer is monitoring, and lots of it! A number of commercial solutions, simple and sophisticated, could potentially meet your monitoring needs, but at the beginning we recommend pairing two open source solutions: Prometheus, which collects and stores metrics as time series, queries them, and evaluates alerting rules, and Grafana, which turns those series into dashboards and graphs. Setup instructions can be found here, and the Internet is home to many more guides and tutorials. This opening strategy is particularly effective in conjunction with an instrumentation façade like Micrometer, which gives (JVM) application code vendor-neutral access to metrics instrumentation, making it easier to swap out the metrics technology at a later date. There is also an ever-expanding number of service providers (such as Datadog and Dynatrace) that can help ease the burden of monitoring your microservices.
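Micrometer is a JVM library; to stay in one language, here is a small Python sketch of the same façade idea. Business code talks only to a neutral `MeterRegistry` interface, and a pluggable backend renders the numbers in the Prometheus text exposition format that a Prometheus server scrapes over HTTP. The class names echo Micrometer’s vocabulary, but the methods are our own, and `handle_order` is a hypothetical piece of business code.

```python
from abc import ABC, abstractmethod
from collections import defaultdict


class MeterRegistry(ABC):
    """Vendor-neutral facade: application code depends only on this interface."""

    @abstractmethod
    def increment(self, name: str, amount: float = 1.0, **labels: str) -> None: ...


class PrometheusTextRegistry(MeterRegistry):
    """One possible backend: keeps counters in memory and renders them in
    Prometheus' text exposition format. Label values are not escaped here."""

    def __init__(self) -> None:
        self._counters: dict[tuple, float] = defaultdict(float)

    def increment(self, name, amount=1.0, **labels):
        key = (name, tuple(sorted(labels.items())))
        self._counters[key] += amount

    def scrape(self) -> str:
        lines, typed = [], set()
        for (name, labels), value in sorted(self._counters.items()):
            if name not in typed:  # one TYPE line per metric name
                lines.append(f"# TYPE {name} counter")
                typed.add(name)
            label_str = ",".join(f'{k}="{v}"' for k, v in labels)
            lines.append(f"{name}{{{label_str}}} {value}" if label_str else f"{name} {value}")
        return "\n".join(lines) + "\n"


def handle_order(registry: MeterRegistry, ok: bool) -> None:
    """Hypothetical business code: it knows nothing about Prometheus."""
    registry.increment("orders_processed_total", outcome="success" if ok else "failure")


if __name__ == "__main__":
    registry = PrometheusTextRegistry()
    for ok in (True, True, False):
        handle_order(registry, ok)
    print(registry.scrape(), end="")
```

Moving to another monitoring vendor would mean writing another `MeterRegistry` implementation while `handle_order` stays untouched. In a real service, the output of `scrape()` would be served from an HTTP endpoint (conventionally `/metrics`) for Prometheus to collect, and Grafana would chart the resulting series.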

Another important consideration when it comes to monitoring is how we will manage our microservice logs. Some (if not all) of our microservice instances will be ephemeral, meaning that any logs written inside an instance are lost when that instance is stopped or replaced. We will therefore need a log aggregation strategy that ships logs off each instance as they are produced, so that we can track service health issues that might not show up in metrics alone. Here, we can turn to the syslog protocol for log propagation and to tools like Splunk and the Elastic Stack, or services like Sumo Logic, to aggregate and analyze the results.
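As a sketch of shipping logs off an ephemeral instance, Python’s standard library already includes a syslog handler (`logging.handlers.SysLogHandler`). Below, it is paired with a small JSON formatter so that the aggregator receives structured fields rather than free text. The collector address, the `orders` service name, and the `fields` convention for extra data are assumptions; Splunk, the Elastic Stack, and Sumo Logic each have their own recommended ingestion paths, which this does not attempt to reproduce.

```python
import json
import logging
import logging.handlers


class JsonFormatter(logging.Formatter):
    """Emit one JSON object per record so the aggregator can index fields."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "service": "orders",  # hypothetical service name
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Extra structured data passed as extra={"fields": {...}} (our own convention).
        payload.update(getattr(record, "fields", {}))
        return json.dumps(payload)


def build_logger(host: str = "localhost", port: int = 514) -> logging.Logger:
    """Send logs to a syslog collector instead of the container's local disk.
    Intended to be called once at startup; each call adds another handler."""
    handler = logging.handlers.SysLogHandler(address=(host, port))  # UDP by default
    handler.setFormatter(JsonFormatter())
    logger = logging.getLogger("orders")
    logger.setLevel(logging.INFO)
    logger.addHandler(handler)
    return logger
```

A call such as `build_logger().info("order placed", extra={"fields": {"order_id": 42}})` then sends a single JSON line, prefixed with its syslog priority, to the configured collector, where it survives even after the container that produced it is gone.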

Where do we go next?

So, there we have it: a high-level overview of how to get our microservices operation off the ground. In future articles, we’ll tackle more advanced topics such as creating APIs, along with related subjects like API composition, gateways, and API management and governance. We will also talk about messaging strategies, event sourcing, orchestration vs. choreography, and service meshes. Finally, we’ll start unravelling the mystery of monolith deconstruction. So, stay tuned, and please feel free to reach out to us at info@smartwavesa.com if you’d like to discuss how we can help you start your microservices journey.